LSTM

Long short-term memory (LSTM) is a recurrent architecture well suited to learning to classify, process and predict time series in which important events are separated by long time lags of unknown length.

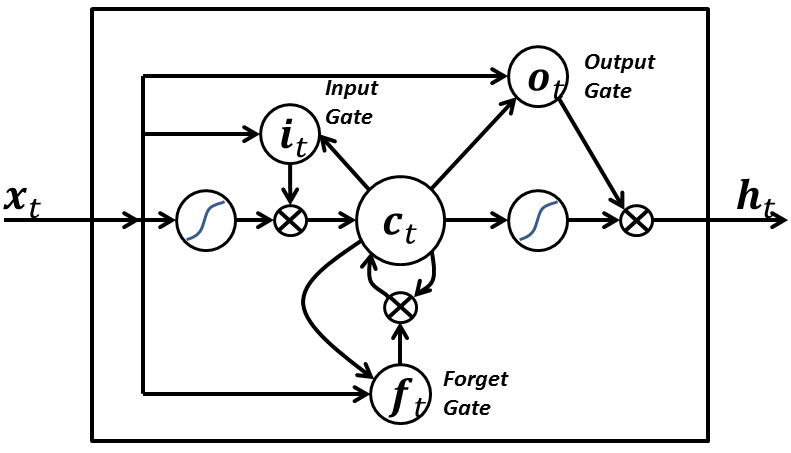

To use this architecture, you must set at least one input node, one memory block assembly (consisting of four nodes: input gate, memory cell, forget gate and output gate), and one output node. The constructor takes the sizes in that order: input, memory blocks, output.

```javascript
var myLSTM = new architect.LSTM(2, 6, 1);
```
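To make the role of the four nodes concrete, here is a minimal sketch of one time step of a single memory block in the textbook LSTM form. It is not the library's internal implementation, which may wire its gates differently. The function `memoryBlockStep` and the scalar `weight`/`bias` parameters are hypothetical names chosen for illustration.

```javascript
// Logistic function used by the three gates.
function sigmoid(x) {
  return 1 / (1 + Math.exp(-x));
}

// One time step of a single memory block (textbook form, scalar input).
// `params` holds hypothetical { weight, bias } pairs for each node;
// `state` is the memory cell value from the previous step.
function memoryBlockStep(input, state, params) {
  const inputGate = sigmoid(params.inputGate.weight * input + params.inputGate.bias);
  const forgetGate = sigmoid(params.forgetGate.weight * input + params.forgetGate.bias);
  const outputGate = sigmoid(params.outputGate.weight * input + params.outputGate.bias);
  const candidate = Math.tanh(params.cell.weight * input + params.cell.bias);

  // The forget gate scales the old memory; the input gate scales the new candidate.
  const nextState = forgetGate * state + inputGate * candidate;
  // The output gate decides how much of the memory is exposed.
  const output = outputGate * Math.tanh(nextState);
  return { state: nextState, output: output };
}

// With the input gate closed and the forget gate open, the cell keeps its value.
const kept = memoryBlockStep(1, 0.5, {
  inputGate: { weight: 0, bias: -20 },
  forgetGate: { weight: 0, bias: 20 },
  outputGate: { weight: 0, bias: 20 },
  cell: { weight: 1, bias: 0 }
});
```

Holding the memory cell nearly unchanged across steps in this way is what lets an LSTM bridge long time lags between relevant events.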

You can also stack several layers of memory blocks:

```javascript
var myLSTM = new architect.LSTM(2, 4, 4, 4, 1);
```

This LSTM network has 3 memory block assemblies of 4 memory cells each, and every assembly has its own input gates, forget gates and output gates.

If desired, you can pass an options object as the last argument:

```javascript
var options = {
  memoryToMemory: false, // default is false
  outputToMemory: false, // default is false
  outputToGates: false,  // default is false
  inputToOutput: true,   // default is true
  inputToDeep: true      // default is true
};

var myLSTM = new architect.LSTM(2, 4, 4, 4, 1, options);
```
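As a rough illustration of how such an argument list can be read, the sketch below splits it into an input size, a list of memory block sizes, an output size and an options object. `describeLSTMArgs` is a hypothetical helper, not part of the library, and the assumption that omitted option keys fall back to the defaults listed above is mine rather than something the text confirms.

```javascript
// Defaults as listed in the options example.
const DEFAULT_OPTIONS = {
  memoryToMemory: false,
  outputToMemory: false,
  outputToGates: false,
  inputToOutput: true,
  inputToDeep: true
};

// Hypothetical helper: splits LSTM-style arguments into sizes and options.
function describeLSTMArgs(...args) {
  const last = args[args.length - 1];
  const hasOptions = typeof last === 'object' && last !== null;
  const sizes = hasOptions ? args.slice(0, -1) : args;
  if (sizes.length < 3) {
    throw new Error('Expected an input size, at least one memory block size and an output size');
  }
  return {
    inputSize: sizes[0],
    memoryBlocks: sizes.slice(1, -1), // one entry per memory block assembly
    outputSize: sizes[sizes.length - 1],
    // Assumption: keys that are not passed keep their default value.
    options: Object.assign({}, DEFAULT_OPTIONS, hasOptions ? last : {})
  };
}

const described = describeLSTMArgs(2, 4, 4, 4, 1, { memoryToMemory: true });
// described.memoryBlocks is [4, 4, 4]; described.options.memoryToMemory is true
```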

When training an LSTM on sequences or time-series prediction, make sure you set the `clear` option to `true`. See an example of sequence prediction here.
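A minimal sketch of what such a setup might look like: the helper `toNextStepPairs` is hypothetical and turns one sequence into "current value, next value" training pairs. The sequence, network sizes and all option values other than `clear` are illustrative assumptions. The training call itself is left as a comment because it needs the library to be loaded.

```javascript
// Hypothetical helper: pair every item of a sequence with the item that follows it.
function toNextStepPairs(sequence) {
  const pairs = [];
  for (let i = 0; i < sequence.length - 1; i++) {
    pairs.push({ input: [sequence[i]], output: [sequence[i + 1]] });
  }
  return pairs;
}

// Illustrative sequence: a 1 appears after every three 0s.
const trainingSet = toNextStepPairs([0, 0, 0, 1, 0, 0, 0, 1, 0]);

const trainingOptions = {
  clear: true,      // the option the text asks for when training on sequences
  iterations: 1000, // illustrative value
  error: 0.05,      // illustrative value
  rate: 0.3         // illustrative value
};

// With the library loaded, training would look roughly like this:
// var myLSTM = new architect.LSTM(1, 6, 1);
// myLSTM.train(trainingSet, trainingOptions);
```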

This is an example of character-by-character typing by an LSTM: JSFiddle